I've implemented a "delayed writes" option for imap-backup to avoid excessive rewrites of the metadata on large mailboxes.

Until recently, imap-backup wrote emails and metadata to disk one at a time. This approach made sense for small folders, and for machines with few resources, but with large folders it could get very slow.

With mailboxes of hundreds of thousands of messages, backups were taking a couple of hours.

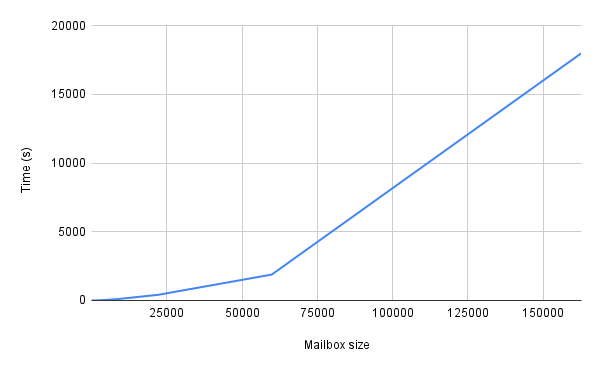

In fact, backup times grew much faster than linearly: roughly with the square of the mailbox size.

The Cause

imap-backup stores mailbox information in two files. One is a standard MBOXRD flat file of messages. The other is a JSON metadata file, with a .imap extension.

The .imap file records the UID (message id), message flags ('Seen', 'Draft', etc) and the offset and length of the message in the .mbox file.
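To make the role of the offset and length concrete, here is a minimal sketch of how metadata like this can be used to pull a single message out of a flat file. The JSON layout and the field names (`uid`, `flags`, `offset`, `length`) are assumptions for illustration and may not match imap-backup's exact on-disk format.

```ruby
require "json"
require "stringio"

# Hypothetical metadata, shaped like the description above.
METADATA_JSON = <<~JSON
  {
    "uid_validity": 1,
    "messages": [
      {"uid": 1, "flags": ["Seen"], "offset": 0, "length": 12},
      {"uid": 2, "flags": [], "offset": 12, "length": 13}
    ]
  }
JSON

# Stand-in for the mailbox file: two "messages" stored back to back.
MAILBOX = "first email\nsecond email\n"

# Look up a message by UID and read exactly its bytes from the mailbox.
def read_message(io, metadata, uid)
  entry = metadata["messages"].find { |m| m["uid"] == uid }
  return nil unless entry

  io.seek(entry["offset"])
  io.read(entry["length"])
end

metadata = JSON.parse(METADATA_JSON)
puts read_message(StringIO.new(MAILBOX), metadata, 2)
```

Because every message's position is stored in the metadata, a reader never has to scan the mailbox file to find a message.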

To avoid corruption, both files are written in a synchronised way and rolled back on errors. While writing to the .mbox file is a simple append for each message, the .imap file is completely rewritten on every save. So, backing up a 100 000 message mailbox means 100 000 writes to this file, with each write slightly larger than the last and requiring JSON serialization of more data!
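The cost of rewriting a growing file can be illustrated with a small sketch that counts how many bytes get serialized under each approach. The entry fields and helper names below are made up for the illustration; only the pattern (rewrite everything per message versus write once) comes from the description above.

```ruby
require "json"

# Build a metadata entry; the fields are illustrative.
def entry(uid)
  {uid: uid, flags: ["Seen"], offset: uid * 52, length: 52}
end

# Total bytes serialized when the whole file is rewritten after every message.
def bytes_rewriting_each_time(count)
  messages = []
  (1..count).sum do |uid|
    messages << entry(uid)
    JSON.generate({messages: messages}).bytesize
  end
end

# Total bytes serialized when the file is written once at the end.
def bytes_writing_once(count)
  JSON.generate({messages: (1..count).map { |uid| entry(uid) }}).bytesize
end

[100, 200, 400].each do |count|
  puts "#{count}: rewrite=#{bytes_rewriting_each_time(count)} once=#{bytes_writing_once(count)}"
end
```

Doubling the message count roughly quadruples the bytes serialized when rewriting every time, while the write-once total only doubles.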

Transactions

The solution was to hold downloaded metadata in memory, write messages to disk and write the .imap file just once. I've called this the "delayed metadata" strategy.

In order to maintain integrity guarantees for the serialized mailbox, I created a transaction system for the Serializer, to wrap the appends to the mailbox and the metadata file.

```ruby
download_serializer.transaction do
  downloader.run
  ...
end
```

From /lib/imap/backup/account/folder_backup.rb

If any error or exception occurs, the mailbox is rolled back to its starting point, and no metadata is ever written.
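As a rough illustration of the idea, and not imap-backup's actual Serializer, the following sketch appends message bodies immediately, keeps metadata in memory, and writes the metadata file only when the block completes. The class name, method names and file layout are all hypothetical.

```ruby
require "json"
require "tmpdir"

# Hypothetical serializer: appends messages immediately, but only writes
# the metadata file when the transaction block finishes without error.
class DelayedMetadataSerializer
  def initialize(mbox_path, imap_path)
    @mbox_path = mbox_path
    @imap_path = imap_path
    @messages = []
  end

  def transaction
    start_length = File.exist?(@mbox_path) ? File.size(@mbox_path) : 0
    saved_messages = @messages.dup
    yield
    # Single metadata write for the whole batch.
    File.write(@imap_path, JSON.generate({messages: @messages}))
  rescue StandardError, SignalException
    # Undo the appends and forget the pending metadata.
    File.truncate(@mbox_path, start_length) if File.exist?(@mbox_path)
    @messages = saved_messages
    raise
  end

  def append(uid, body)
    offset = File.exist?(@mbox_path) ? File.size(@mbox_path) : 0
    File.write(@mbox_path, body, mode: "a")
    @messages << {uid: uid, offset: offset, length: body.bytesize}
  end
end

Dir.mktmpdir do |dir|
  serializer = DelayedMetadataSerializer.new("#{dir}/folder.mbox", "#{dir}/folder.imap")
  serializer.transaction do
    serializer.append(1, "first\n")
    serializer.append(2, "second\n")
  end
  puts File.read("#{dir}/folder.imap")
end
```

If the block raises, the mailbox file is cut back to its length at the start of the transaction and the metadata file on disk is left untouched.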

The Other Strategy

While working on this feature, I actually implemented another "delay everything" strategy, which also delayed writing the message data to the mailbox file. Doing so meant holding a lot more data in memory, and resulted in, at most, a marginal speed gain. So after running benchmarks on both strategies, I dropped the "delay everything" strategy - the extra complexity wasn't worth keeping.

Handling Ctrl+C

During the transaction, the on-disk data for the mailbox now gets ahead of the serialized metadata, so I needed to avoid problems caused by manual interruption of a backup run.

To do this, the program traps SignalExceptions alongside the normal StandardErrors in the mailbox writer and the metadata serializer.

```ruby
tsx.begin({savepoint: {length: length}}) do
  block.call
rescue StandardError => e
  message = <<~ERROR
    #{self.class} error #{e}
    #{e.backtrace.join("\n")}
  ERROR
  Logger.logger.error message
  rollback
  raise e
rescue SignalException => e
  Logger.logger.error "#{self.class} handling #{e.class}"
  rollback
  raise e
end
```

From lib/imap/backup/serializer/mbox.rb

Trapping SignalExceptions is unusual in Ruby, where you should normally just let the program terminate, but in this case I feel trapping them is justified in the name of data integrity.
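The reason a separate `rescue` clause is needed is that Ctrl+C is delivered as `Interrupt`, which descends from `SignalException` rather than `StandardError`. A small sketch, with a hypothetical `run_with_cleanup` helper standing in for the real rollback:

```ruby
# Ctrl+C arrives as Interrupt, a subclass of SignalException.
# Rescuing only StandardError would miss it.
def run_with_cleanup(log)
  yield
rescue StandardError => e
  log << "rolled back after #{e.class}"
  raise
rescue SignalException => e
  log << "rolled back after signal #{e.class}"
  raise
end

log = []
begin
  # Simulate the user pressing Ctrl+C inside the block.
  run_with_cleanup(log) { raise Interrupt }
rescue Interrupt
  # Re-raised after cleanup; a real program would exit here.
end
puts log
```

Re-raising after the cleanup keeps the usual behaviour: the program still terminates, it just tidies up first.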

In the mailbox writer, the rollback actually rewinds the file to its starting position:

```ruby
File.open(pathname, File::RDWR | File::CREAT, 0o644) do |f|
  f.truncate(length)
end
```

While, in the metadata serializer, it just resets itself to the preceding metadata:

```ruby
@messages = tsx.data[:savepoint][:messages]
@uid_validity = tsx.data[:savepoint][:uid_validity]
```

Maintaining Two Strategies

As this new system uses more memory than the original one-at-a-time approach, it could cause problems on smaller systems with limited resources. For example, backing up email to disk on a Raspberry Pi is a common use case for imap-backup.

To allow users to keep resource usage to a minimum if needed, I've kept the original strategy and added a configuration option to choose between the new, faster strategy and the old, less resource-hungry one.

```
Choose a Download Strategy:
1. write straight to disk
2. delay writing metadata <- current
3. help
4. (q) return to main menu
?
```

As the new strategy works best on all but the most resource-starved machines, I've made it the default.

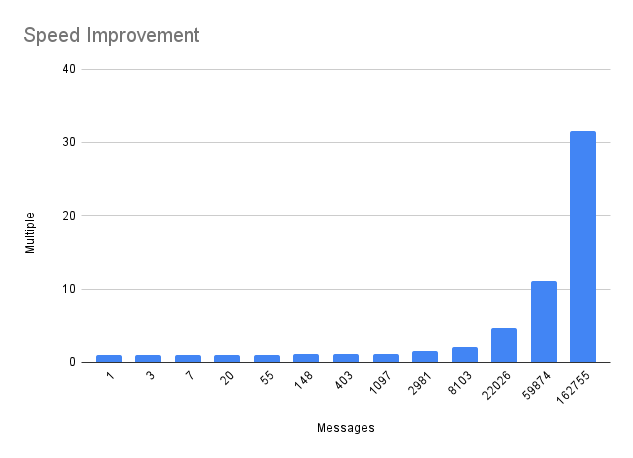

Benchmarks

These benchmarks were run on a Framework Laptop with 16GB RAM and a solid-state disk.

The IMAP server used was a local Docker image running Dovecot.

Mailboxes were filled with the required number of identical messages, each 52 bytes long.

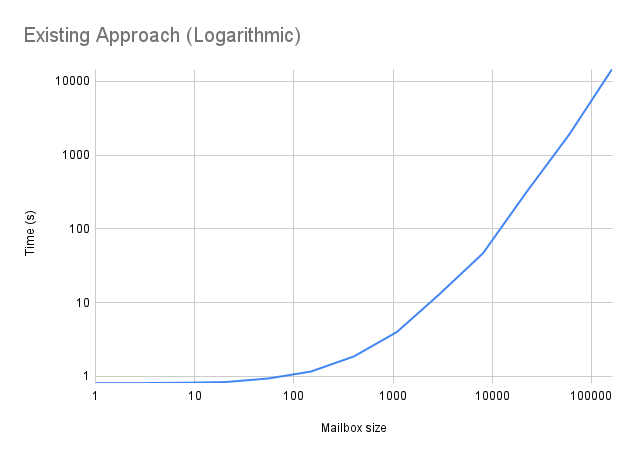

I chose sample sizes that grew exponentially:

1, 3, 7, 20, 55, 148, 403, 1097, 2981, 8103, 22026, 59874, 162755

Doing so means I can plot results on a logarithmic scale and get evenly spaced data points.
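The sizes listed above match successive powers of e rounded to the nearest integer. Assuming that is how they were chosen (the benchmark script itself isn't shown here), they can be regenerated with a one-liner:

```ruby
# Powers of e from e^0 to e^12, rounded to the nearest integer.
SAMPLE_SIZES = (0..12).map { |k| Math.exp(k).round }

puts SAMPLE_SIZES.join(", ")
```

Since each size is a constant factor larger than the previous one, the points land at equal intervals on a logarithmic axis.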

Each sample size was measured 4 times, and I averaged the results. All times are in seconds.

These are the results for the "straight to disk" strategy:

| Count | Run 1 | Run 2 | Run 3 | Run 4 | Average |
|---|---|---|---|---|---|
| 1 | 0.8107 | 0.8101 | 0.81012 | 0.8159 | 0.8117 |
| 3 | 0.8103 | 0.8124 | 0.8109 | 0.8107 | 0.8111 |
| 7 | 0.8104 | 0.8139 | 0.8122 | 0.8391 | 0.8189 |
| 20 | 0.9109 | 0.8126 | 0.8108 | 0.8103 | 0.8362 |
| 55 | 0.9112 | 0.9114 | 1.0128 | 0.9123 | 0.9369 |
| 148 | 1.214 | 1.111 | 1.213 | 1.111 | 1.162 |
| 403 | 1.816 | 2.015 | 1.814 | 1.816 | 1.865 |
| 1097 | 4.225 | 3.823 | 3.629 | 4.357 | 4.008 |
| 2981 | 13.58 | 13.19 | 12.99 | 13.19 | 13.23 |
| 8103 | 46.80 | 46.62 | 45.59 | 48.13 | 46.78 |
| 22026 | 379.2 | 308.3 | 280.3 | 269.1 | 309.2 |
| 59874 | 1892 | 1876 | 1883 | 1904 | 1889 |
| 162755 | 14710 | 14720 | 14660 | 14380 | 14620 |

And these are the results for the "delayed metadata" strategy:

| Count | Run 1 | Run 2 | Run 3 | Run 4 | Average |
|---|---|---|---|---|---|
| 1 | 0.8096 | 0.9128 | 0.8115 | 0.8109 | 0.8362 |
| 3 | 0.8102 | 0.8104 | 0.8124 | 0.8114 | 0.8111 |
| 7 | 0.8110 | 0.8106 | 0.8105 | 0.8108 | 0.8107 |
| 20 | 0.8107 | 0.8107 | 0.8123 | 0.8108 | 0.8111 |
| 55 | 0.9129 | 0.9109 | 0.9111 | 0.9107 | 0.9114 |
| 148 | 1.011 | 1.112 | 1.011 | 1.012 | 1.037 |
| 403 | 1.614 | 1.714 | 1.617 | 1.614 | 1.640 |
| 1097 | 3.126 | 3.120 | 3.222 | 4.632 | 3.525 |
| 2981 | 7.843 | 9.051 | 9.756 | 7.944 | 8.649 |
| 8103 | 19.59 | 23.61 | 22.86 | 21.10 | 21.79 |
| 22026 | 69.71 | 70.05 | 65.54 | 60.77 | 66.52 |
| 59874 | 175.1 | 165.5 | 163.8 | 172.5 | 169.2 |
| 162755 | 462.1 | 443.2 | 478.1 | 467.0 | 462.6 |

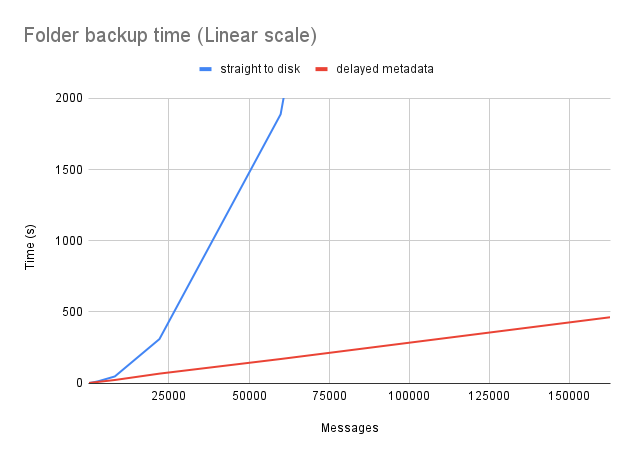

With small mailboxes, times are more or less the same, but as the number of messages grows, the new strategy is much faster:

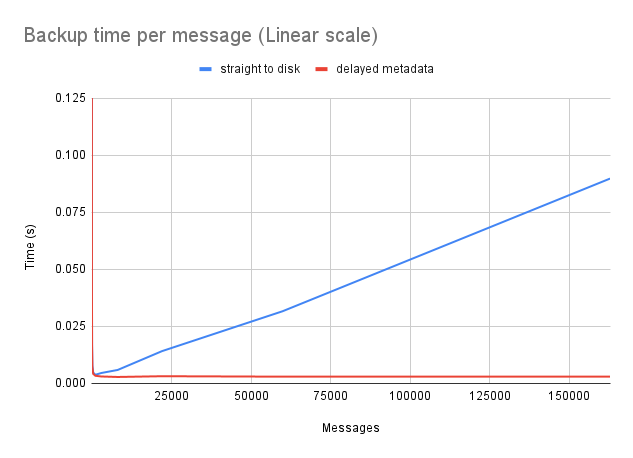

As was clear from the start, rewriting the metadata file each time a new message was added was only going to get worse. In fact, the per-message backup time grows linearly with the "straight to disk" strategy, while it remains more or less constant with the new strategy.
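This can be checked against the averages in the two tables above. The sketch below divides each average by the message count for the four largest sample sizes; the constant and method names are just for this illustration.

```ruby
# Average total times for the four largest mailboxes, copied from the tables.
AVERAGES = {
  8103 => {direct: 46.78, delayed: 21.79},
  22026 => {direct: 309.2, delayed: 66.52},
  59874 => {direct: 1889.0, delayed: 169.2},
  162755 => {direct: 14620.0, delayed: 462.6}
}

# Time per 1000 messages, in the same unit as the tables.
def per_thousand(total, count)
  (total * 1000.0 / count).round(2)
end

AVERAGES.each do |count, times|
  direct = per_thousand(times[:direct], count)
  delayed = per_thousand(times[:delayed], count)
  puts "#{count}: straight to disk=#{direct} delayed metadata=#{delayed}"
end
```

The "straight to disk" figure keeps climbing as the mailbox grows, while the "delayed metadata" figure stays within a narrow band.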

How Much Faster?

In these benchmarks, while the new strategy changed nothing for small mailboxes, it showed a more than 30x speed improvement for mailboxes with more than 150 000 messages.
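The speed-up at each of the larger sizes follows directly from the averages in the benchmark tables. A small sketch of the arithmetic (names are illustrative):

```ruby
# [count, straight-to-disk average, delayed-metadata average] from the tables.
LARGE_RUNS = [
  [8103, 46.78, 21.79],
  [22026, 309.2, 66.52],
  [59874, 1889.0, 169.2],
  [162755, 14620.0, 462.6]
]

# How many times faster the new strategy is.
def speedup(direct, delayed)
  direct / delayed
end

LARGE_RUNS.each do |count, direct, delayed|
  puts format("%d messages: %.1fx faster", count, speedup(direct, delayed))
end
```

The ratio itself grows with mailbox size, which is why the benefit only becomes dramatic for very large folders.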

It worked!